The Overfit Theory of Everything

What is the point of dreams, fiction, art, novelty, even boredom? 🧚♂️

The most fascinating mysteries are extraordinarily general. Beauty is one: we can find virtually anything beautiful, from people to stories to mathematical proofs, and that makes it challenging to explain the phenomenon. Dreams are another: we can dream about anything, from scary monsters to erotic scenarios; our dreams are sometimes obviously connected to our waking life, and sometimes not at all; everyone dreams, every night; and many other animals dream as well, including, as far as we know, all mammals, and possibly birds. So, why do we dream?

Blogger extraordinaire and neuroscientist Erik Hoel has a hypothesis. All mainstream theories of dreaming, he says, have some problems: dreams aren’t actually great at emotional regulation, memory consolidation, or selective forgetting, which have all been proposed as the purpose of dreams. Nor is the default hypothesis — that dreams serve no function, and are just a byproduct of sleep or something else — particularly plausible, considering that they are universal in mammals and apparently necessary for good health. Instead, Erik suggests, dreams have probably evolved to prevent overfitting.

Overfitting is a concept from data science and machine learning. Because it is central to this post, let’s take a moment to explain what it means. This is going to involve math, but only in a very gentle way.

(If you already know what overfitting is, then you can skip the next section. I’m aiming for “insultingly simple explanation,” and I wouldn’t want you to feel insulted.)

I. In which overfitting is explained in an insultingly simple manner

The basic idea of machine learning is that you give a bunch of data to a machine (say, cat images from the internet), you give it a bunch of random numbers (literally), and then you make the machine tweak those numbers according to the data until they aren’t random anymore. Once that’s done, your machine can do useful things — like identifying cats in images, or even generating new ones.

The numbers, which are random at the start and optimized to the data at the end, are collectively called a “model.” “Model” is a stupid, non-descriptive word that took me years to get used to, but we can’t really avoid it since nobody realizes it’s a vague word and everyone uses it all the time. In an attempt to clear any possible confusion, just remember that a model is merely a bunch of numbers organized in some way, such that you can give it some input and receive some output.

For an image generation task, models tend to be complicated, gigantic arrays of numbers in files that weigh several gigabytes. For a simpler task, the model might just be a small mathematical equation, like y=-2x+3, where x is the input, y is the output, and -2 and 3 are the numbers we’re trying to find.

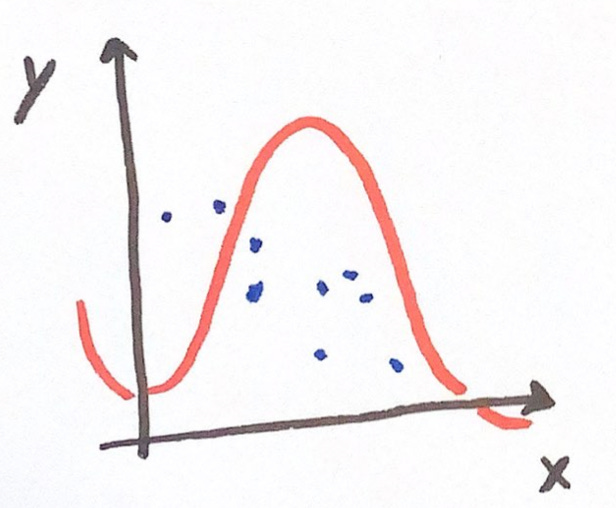

In real life, there’s always a bit of randomness (we call it “noise”), and models are always imperfect. The goal is just to find a model that works reasonably well with the current data and with future new data. So, suppose a natural phenomenon — in this case, me writing this post — generated some data from the y=-2x+3 equation, with added noise. The data we have might look like this:

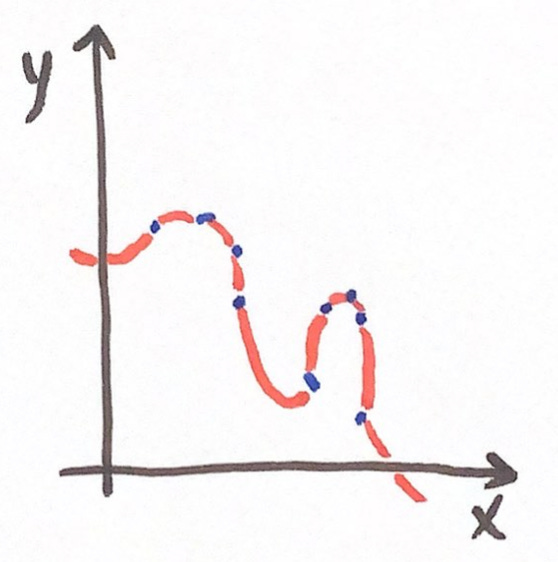

You know that the simple y=-2x+3 equation, which describes a straight line in the same orientation as the cloud of points above, is a good model for this, because I just told you. But your machine doesn’t know this (yet). It starts with random numbers, say y=0.736x-12.503, or even a more complicated model with several more random numbers and which isn’t a straight line, e.g.:

Then you tell the machine: adjust your numbers so that they fit the data better, repeatedly. Okay, says the machine, and it does this a few times, until it lands on something like y=-1.9996x+3.002:
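To make the "adjust your numbers so that they fit the data better, repeatedly" step concrete, here is a minimal Python sketch. It assumes the simplest possible setup: a straight-line model y = a·x + b, mean squared error as the measure of fit, and plain gradient descent as the rule for adjusting the numbers. The helper names, noise level, learning rate, and number of steps are illustrative assumptions, not anything a particular library prescribes.

```python
import random


def make_data(n, noise=1.0, seed=0):
    """Generate noisy samples from the 'true' phenomenon y = -2x + 3."""
    rng = random.Random(seed)
    xs = [rng.uniform(-5, 5) for _ in range(n)]
    ys = [-2 * x + 3 + rng.gauss(0, noise) for x in xs]
    return xs, ys


def fit_line(xs, ys, steps=2000, learning_rate=0.01, seed=1):
    """Start from two random numbers (a, b) and repeatedly nudge them to reduce the error."""
    rng = random.Random(seed)
    a, b = rng.uniform(-20, 20), rng.uniform(-20, 20)  # the random initial "model"
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean squared error of y ~ a*x + b with respect to a and b
        errors = [a * x + b - y for x, y in zip(xs, ys)]
        grad_a = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Move each number a small step in the direction that reduces the error
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
    return a, b


xs, ys = make_data(200)
a, b = fit_line(xs, ys)
print(f"y = {a:.4f}x + {b:.4f}")
```

Because of the noise, the numbers it lands on should be close to -2 and 3 but not exactly equal to them, much like the y=-1.9996x+3.002 above.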

Nice! We have found a good model! But wait: this is not a perfect fit yet. Most of those data points aren’t on the line. (That’s just due to the noise, but the machine doesn’t know that.) So it keeps going, adjusting the numbers of its arbitrarily complex model, until:

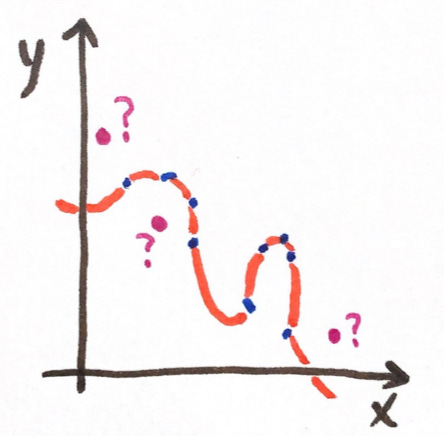

Umm. It’s true that this fits the data very well, but… well, since the original data were generated by a phenomenon that is correctly modelled by the y=-2x+3 equation (plus some noise), then this fit is actually pretty bad, despite its apparent perfection. Add a few new data points, and you immediately see that the model doesn’t make good predictions at all.
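The same story can be reproduced in a short Python sketch. It makes its own assumptions: 20 evenly spaced training points from y = -2x + 3 plus Gaussian noise, a least-squares straight line as the sensible model, and a Lagrange interpolating polynomial as a stand-in for the "arbitrarily complex model" that passes through every single point. It then compares each model's error on the training data with its error on new points drawn from the same phenomenon. All helper names are hypothetical.

```python
import random


def noisy_samples(xs, noise=1.0, seed=0):
    """Noisy observations of the 'true' phenomenon y = -2x + 3 at the given xs."""
    rng = random.Random(seed)
    return [-2 * x + 3 + rng.gauss(0, noise) for x in xs]


def fit_line(xs, ys):
    """Least-squares straight line; returns a function x -> a*x + b."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def fit_interpolant(xs, ys):
    """Lagrange polynomial through every training point: a 'perfect' fit."""
    def predict(x):
        total = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            basis = 1.0  # equals 1 at xj and 0 at every other training x
            for m, xm in enumerate(xs):
                if m != j:
                    basis *= (x - xm) / (xj - xm)
            total += yj * basis
        return total
    return predict


def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


train_x = [float(i) for i in range(20)]
train_y = noisy_samples(train_x, seed=0)
test_x = [i + 0.5 for i in range(19)]  # new points between the old ones
test_y = noisy_samples(test_x, seed=1)

line = fit_line(train_x, train_y)
wiggly = fit_interpolant(train_x, train_y)

for name, model in [("line", line), ("interpolant", wiggly)]:
    print(f"{name}: train error {mse(model, train_x, train_y):.3g}, "
          f"test error {mse(model, test_x, test_y):.3g}")
```

The exact numbers depend on the random seeds, but the pattern should hold: the interpolant's training error is zero, while its error on the new points is far larger than the line's, because the polynomial swings wildly between the training points, particularly near the ends of the range.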

This is what overfitting is. It’s a failure of generalization. It is over-optimization. It is what happens when you learn too well.

II. In which the analogy between dreams and data augmentation is elaborated upon

Dreams, Erik Hoel points out, have three important qualities: they’re sparse, hallucinatory, and narrative.

They’re sparse in that they’re not a full-resolution simulation of reality. Small details rarely occur in dreams, and you generally can’t, for instance, read text or use a phone with tiny icons. Your brain is not trying to reproduce everything. It’s painting an imaginary landscape in broad strokes.

They’re hallucinatory in that they stage objects, people, and events, as well as combinations of those, that you have not actually seen or experienced — and often that you cannot experience at all since they’re physically impossible.

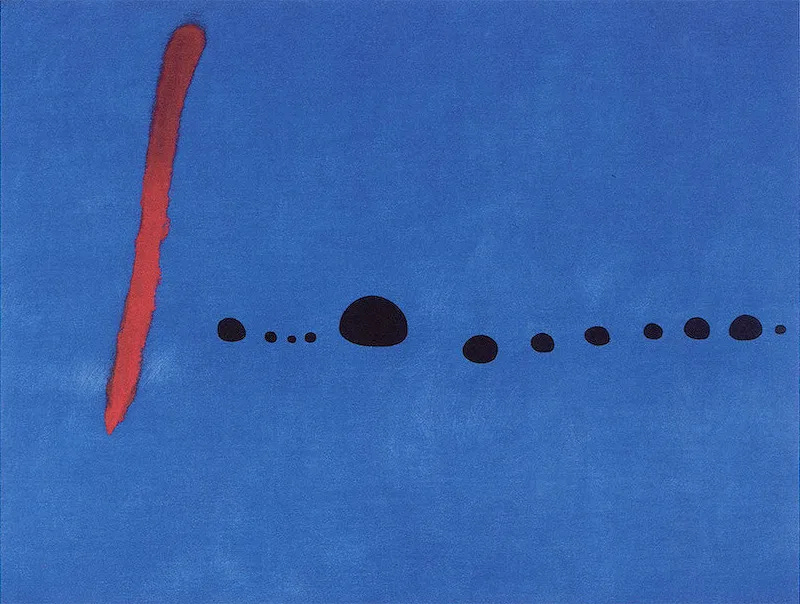

Interestingly, these two characteristics closely match two strategies that machine learning programmers use to avoid overfitting. One such strategy is dropout: it means temporarily switching off random parts of the machine learning system during training, so that it can’t rely too heavily on any specific part of its architecture to fit the data. This is analogous (says Erik) to the sparseness of dreams. But more to the point, in my view, is another strategy, data augmentation, which I’ll illustrate with this collage involving my late family cat:

As you can see, data augmentation involves creating slightly altered versions of the same item — in this case a picture, but it could be other kinds of data. Each altered picture is still recognizable as a cat, so we do want the ML system to recognize them all. We don’t want the system to wrongly learn that the cat must be in a specific position or have a specific color.
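The two strategies from the previous paragraphs can be sketched in a few lines of Python. In this minimal illustration, assuming a tiny grayscale "image" stored as a list of rows of pixel values from 0 to 255, the hypothetical `augment` helper makes randomly mirrored, lightened or darkened, and slightly shifted copies of one labelled picture, and the hypothetical `dropout` helper shows the common "inverted dropout" trick of switching off random units during a training step. Real libraries implement both far more elaborately; the names and parameters here are illustrative assumptions.

```python
import random


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def shift_brightness(image, delta):
    """Lighten (delta > 0) or darken (delta < 0), clamping pixels to 0..255."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]


def shift_right(image, pixels, fill=0):
    """Slide the image right by `pixels` columns, padding the left edge with `fill`."""
    return [[fill] * pixels + row[:len(row) - pixels] for row in image]


def augment(image, label, copies, seed=0):
    """Return `copies` randomly altered versions of one labelled example.

    Every altered version keeps the original label: a mirrored,
    darker, or slightly shifted cat is still a cat.
    """
    rng = random.Random(seed)
    examples = []
    for _ in range(copies):
        altered = image
        if rng.random() < 0.5:
            altered = flip_horizontal(altered)
        altered = shift_brightness(altered, rng.randint(-40, 40))
        altered = shift_right(altered, rng.randint(0, 1))
        examples.append((altered, label))
    return examples


def dropout(activations, rate, rng):
    """Inverted dropout for one training step (assumes 0 <= rate < 1).

    Each unit is switched off (set to 0) with probability `rate`; survivors
    are scaled up by 1 / (1 - rate) so the expected total stays the same.
    """
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# A hypothetical 3x4 grayscale "cat" (pixel values from 0 to 255)
tiny_cat = [
    [0, 200, 0, 200],
    [200, 200, 200, 200],
    [0, 200, 200, 0],
]
batch = augment(tiny_cat, "cat", copies=5)
print([label for _, label in batch])  # ['cat', 'cat', 'cat', 'cat', 'cat']
print(dropout([0.5, 1.0, 2.0, 0.1], rate=0.5, rng=random.Random(0)))
```

In this sketch, dropout would only be applied while training; when the finished model is used, every unit stays switched on.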

This plausibly matches the tendency of dreams to be nonsensical hallucinations, where you witness e.g. a meteorite landing in your childhood home’s backyard, but it’s actually a rock that had been put in orbit in 1951, and attached to the rock is a quarter-full bottle of “cinnamon beer” and a multilingual note explaining why, which was something about a bet during a card game. (This is from an actual dream I logged a while ago.)

Erik explains that the sparse and hallucinatory nature of dreams spells trouble for most other theories. For instance, it makes very little sense that dreams would help with consolidating memories. If that were their purpose, they’d be way more faithful to the actual waking experiences they’re supposed to help us remember. Instead, sparseness and hallucination are consistent with avoiding overfit and helping your brain generalize.

But generalize about what? This is where the third quality comes in: dreams are narrative. They’re stories. They’re sequences of events that make (some!) sense.

Stories are the way we understand the world. As you go about your waking life, you experience events that you naturally tend to connect together into a coherent structure. When you read or hear about events happening to other people, you naturally pay more attention if they’re told as a story rather than disconnected occurrences.

A machine learning model can be designed to generate art, or answer questions, or recommend the next Netflix movie you should watch. Each needs to be trained with a specific type of data: pictures from the internet, large text corpora, or tables of Netflix users and what films they previously liked. You, presumably a human reader, are designed to understand and generate stories. So you need to be trained on stories. Most of your training data comes from the events you naturally experience during your waking life, every day — but each night, as you rest, your brain takes the opportunity to perform data augmentation, making sure you don’t learn from your waking life too well.

III. In which fiction is likened to artificial dreams

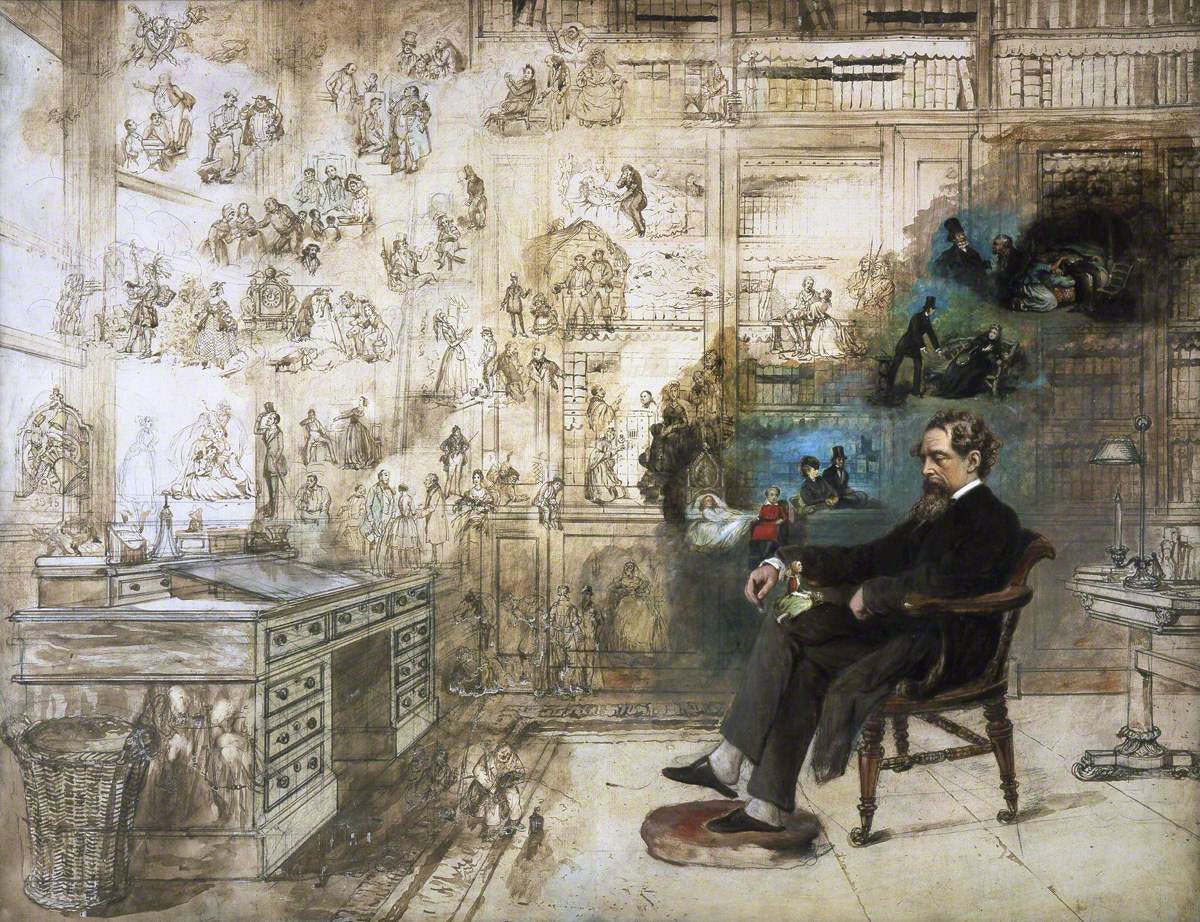

One suspects that Erik Hoel, who is also a novelist, truly cares less about explaining dreams than about explaining a related phenomenon: fictional stories.

The hypothesis I’ve outlined above is explained in detail in his academic paper titled “The Overfitted Brain.” At the very end, in a single paragraph that feels like a rewarding opening of the world after spending a while dealing with the minutiae of neuroscience, he writes:

The [Overfitted Brain Hypothesis] suggests fictions, and perhaps the arts in general, may actually have a deeper underlying cognitive utility in the form of improving generalization and preventing overfitting by acting as artificial dreams.

As he also explains more extensively in his essay “Exit the supersensorium,” fiction, from myths to novels to movies to video games, is rather puzzling. Indeed, it’s another of those weirdly general mysteries, like beauty and dreaming itself. Fiction is literally false information. Why do we care so much about sequences of events that explicitly didn’t happen?

Just like with dreams, we can make up ad hoc theories. All of them have serious problems. Fictions are sometimes useful for learning complicated stuff that is difficult to teach in nonfiction format, but clearly a lot of fiction isn’t concerned with teaching at all. They might have benefits like encouraging moral behavior, as in the case of some religious narratives, but that’s also not general enough: most stories are morally neutral, and some are even morally evil. Fictions are sometimes beautiful, but that’s just kicking the can to the even trickier question of why we care about beauty. (More on that later.) Maybe fictions serve no purpose, and are just a side effect of other psychological phenomena, a superstimulus that some clever shamans and storytellers discovered and that they’ve been taking advantage of ever since. But then we might expect to have developed cultural defences against it. Instead, well-crafted fictions are, still today, prestigious and sought after.

Fictions share enough with dreams that the same explanation is probably true of both. Stories, like dreams, tend to be sparse. A fiction never has the same resolution as real life; even the most elaborate imaginary universes, like Tolkien’s Middle-earth, feel orders of magnitude less rich than actual Earth. Stories can also be hallucinatory to various degrees, from slightly fictionalized autobiographies all the way to deliberately nonsensical tales like Alice in Wonderland. And of course, stories are narrative by definition.

Therefore, fictions might be, quite literally, artificial dreams. Our love of them might have evolved for the same reason as dreams did: to avoid overfit and deal better with new data points, i.e., new experiences.

It’s not difficult to see that this hypothesis makes intuitive sense in a variety of situations. Who consumes the most fiction? Children, who are spending almost all their time learning how everything works. We’re notably not worried, when we give them stories with big colorful monsters, or tell them about Santa Claus, that they’re going to learn the wrong model of the world. Instead, we’re “stimulating their imagination.”

How can we increase our creativity? I can’t speak for everyone, but I tend to be way better at idea generation when I’ve been reading good novels. For whatever reason, reading nonfiction doesn’t produce the same effect.

Why do some people like fictions that are literally designed to produce negative emotions, like horror movies? Probably for the same reason that nightmares exist: because sometimes the world is actually scary, and it’s useful to be able to react to that.

There are signs that fictions have played a deep role in human history. Yuval Noah Harari, the author of Sapiens, famously argues that humans rule the world thanks to our ability to believe in imaginary things — like religions, money, and countries. If so, then the way we react to fictional stories has had major importance at least in cultural evolution.

If Hoel’s Overfitted Brain Hypothesis is true, then fiction has had major importance in biological evolution as well. Our distant ancestors survived, and gave birth to our lineage, because they evolved complex brains that were adaptable enough to new situations — and that involved this strange mechanism we call dreaming. From there, the early Homo sapiens were able to invent tales, and myths, and gods, and kingdoms, and economies, and those hundreds and thousands of little fictions that hold the world together.

IV. In which an attempt is made at explaining why novelty is often beautiful

From fiction there is but a short step to art in general, and Erik Hoel does extrapolate, briefly, in that direction. What’s the purpose of art? That’s another difficult question to answer in general terms, but again, “to avoid overfitting” works quite well.

Even when it’s not in narrative form, art can serve as artificial data augmentation. When an abstract painting shows you an interesting shape you’ve never seen before, or when a piece of music brings to your attention a peculiar pattern of sounds, you learn extra data that goes beyond the usual experience of your life. It’s not “real” data in that the natural world is unlikely to contain those exact shapes or sounds, but your brain doesn’t know that. It only knows the experience of your life so far, which does not even come close to containing all shapes and sounds that you could ever see or hear.

These considerations tie into the general mystery I mentioned at the beginning of the post: beauty. Long-time readers of this blog know that I’ve been puzzling quite a lot over why the ability to see beauty exists. My main hypothesis is that beauty evolved as a form of curiosity towards things that are good for us.

Of course, “good for us” is extremely general still. Much of it is learned: once you know that eating vegetables is good for you, then you’re more likely to find vegetables beautiful. But some of it is innate, notably when it comes to the beauty of other people, which matters a lot for reproduction, which in turn matters a lot for evolution.

Outside of specific functions like sex, a big innate contributor to the perception of goodness, and therefore beauty, is novelty. We commonly perceive things we haven’t seen before as more beautiful than their intrinsic properties would otherwise suggest.

For example, it’s common for me to stumble upon a gorgeous image online or in a store, and feel a compulsion to acquire a printed version that I can hang on my wall. But then I wait a bit, and when I look at it the next day, the picture feels just okay, not gorgeous anymore. Clearly the novelty has worn off, and that has a direct effect on my aesthetic appreciation. Some would say that the novelty made the picture feel more beautiful than it “truly” was, but the simpler explanation is that novelty is one of the many variables that enter into our complex aesthetic judgments.

Why do we care about novelty? Probably because acquiring new data is good. If we weren’t attracted to new data, then we would learn poorly, and be less good at survival and reproduction. So it seems likely that, at some point, nervous systems evolved the capacity to react positively to new information, and make themselves feel good about it. Our ancestors avoided trouble better than other animals, because their neurons generated a pleasurable substance whenever they encountered new life experiences, allowing them to avoid overfitting to what they already knew. When something unusual happened — the arrival of a predator, or a rare weather event — they were more likely to survive.

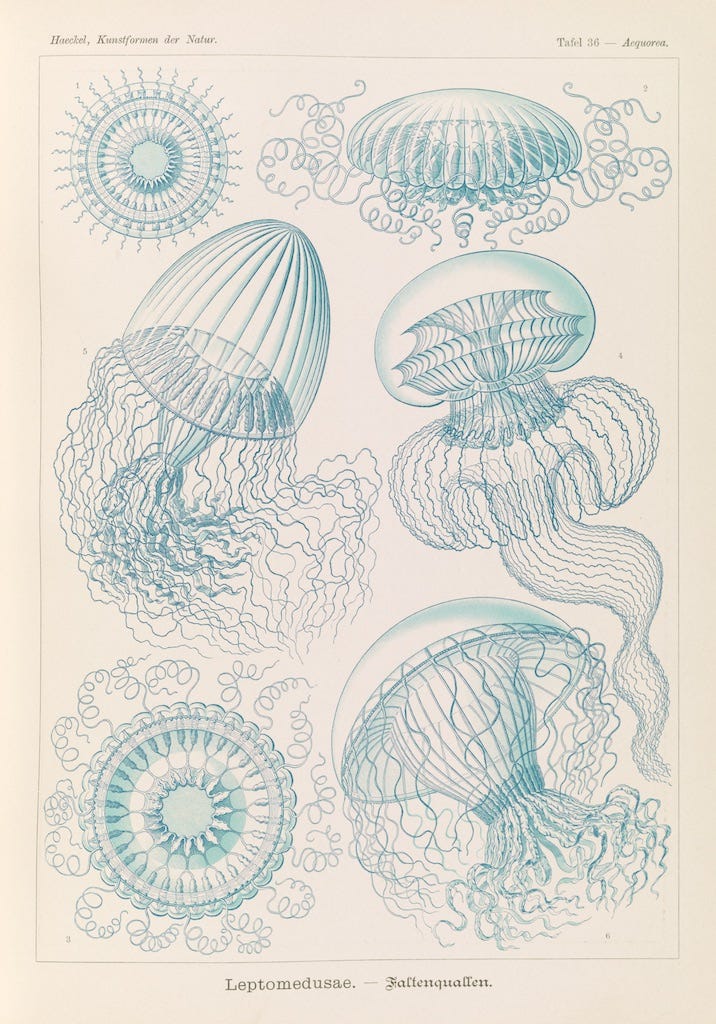

This surely happened in extremely ancient times — perhaps going back to the first hints of brains in the “nerve nets” of jellyfish and their cnidarian brethren, more than 500 million years ago. Only later would the more specialized mechanisms of dream and fiction appear in the lineages with big brains.

An interesting consequence of this reasoning is that we might expect all intelligent beings, whether evolved or designed, to care about novelty. Novelty is more fundamental than the goodness that is due to culture, like the Western music scale or clothing fashions, and to our biological nature, like symmetrical faces or fresh food. It is at least on par with the beauty of certain mathematical truths.

And so a true sign of intelligence might be the ability to find beauty in novelty. If an entity didn’t, it would be way too vulnerable to overfit, and as a result be less intelligent than it otherwise could be.

V. In which the doors of broad generalization are thrown wide open

Let’s go further. This post is called “The Overfit Theory of Everything,” so let’s generalize to everything.

Why do we like tourism? We put ourselves in deliberately less comfortable situations, paying good money to do so, and spend most of our time looking at famous buildings we already knew about from photos. Superficially, it makes little sense. Yet it seems clear that travelling is great at preventing overfit. When you’re far from home, there are a myriad of small details that change from the ordinary life you’re used (and overfitted) to.

Why is exercise good for you? It causes stress to your body, it puts you out of breath, it sometimes causes injuries — but it also forces you to perform gestures you usually don’t in your (presumably) sedentary lifestyle. Your muscles are overfitted to sitting and doing nothing; exercise helps prevent that, which in turn makes you healthier.

What is boredom? It is a sign that you are overfitted to your daily routine. When you’ve been watching TV a lot and it has become stale, that’s a sign your brain is sending you that you need to go out and do new things, because it fears that your stay-at-home behavior has left you with too little data.

Why is meeting people fun? I don’t need to spell it out at this point, but yeah, because each person brings a unique perspective on almost everything, and that’s good data. I suspect that the need to socialize, which is surprisingly visceral sometimes, is caused by the same mechanism that makes us feel boredom and appreciation for novelty. And of course, this is of consequence in the romantic and sexual spheres, too.

Okay, one last one: Why is diversity important? This is another of those extremely general mysteries — diversity matters in such various realms as biology, culture, human identities, finance and other random systems, even political opinions and systems, as well as moral values. The overarching answer to all of these is that diversity provides extra data that allows us to avoid overfitting. A liberal democracy is better than an autocratic monarchy, even though a monarchy can be more “efficient,” because democratic debate allows us to avoid overfitting to a small set of policies. A network of startups is superior to a centralized organization, because the centralized organization is always at risk of overfitting on whatever business ideas have worked previously. A company made of diverse people (in ethnicity, sex, age, class, and opinions) is better than a homogeneous one, because it is less likely to overfit on a small number of assumptions shared by its staff.

I could go on and on. I could recast my essay on the three kinds of happiness in this mold, pointing out that people who seek “psychological richness” are those who are more sensitive to overfitting. I could say that the entire point of studying history or caring about the classics is to avoid overfitting on the present. I could offer career advice and suggest that you quit your boring job to seek diverse problems to solve, and therefore reduce the chances that you overfit to a single problem you don’t even care about.

I could go on and on, which means it might be worth addressing a very valid concern: am I overreaching? Am I, ironically, overfitting my theory of overfit to everything I can think of?

VI. In which the irony at the core of this argument is addressed

Am I guilty of overfitting? The answer is, probably yes! But I’m doing it deliberately, so it’s fine.

It’s worth emphasizing that Erik Hoel’s ideas on dreams and fiction are a hypothesis, not necessarily confirmed by hard evidence, so take everything here with a grain of salt. For instance, the biological basis of what I’ve been discussing is that our brains produce a feel-good neurotransmitter when they see new things, which might not be a good description of what truly happens. The evolutionary psychology angle might be all wrong, as evolutionary psychology often is. Or it might turn out that dreams really did evolve for memory consolidation or whatever.

Nevertheless, I think it’s also worth pretending there is an Overfit Theory of Everything. The main reason is that it’s a really powerful metaphor. It really does help make sense of the highly general mysteries of dreams, fiction, art, novelty, and probably some others I haven’t even thought of. Yet overfit isn’t a widely known concept outside of a small number of people who are familiar with machine learning. So take it as an extra tool, a mathematical justification for common-sense concepts like “stimulating your imagination” and “having diverse life experiences.”

My other reason to care about this is more personal. As I was reading Erik’s supersensorium essay, I got a sudden and strong feeling that it contained some kind of missing link in the intellectual worldview I’ve been slowly building here. There are, I suspect, a few deep and important ideas that exist somewhere in the space between the topics I’ve been writing about — such as aesthetics, diversity, evolution, awareness, and optimization. I think overfit might be, so far, the most promising candidate to link it all together.

I didn’t expect it to come — not quite literally, but almost — from dreams, but hey, weirder things have come from the hallucinatory tales that our brains have been staging for us at night, every night, for the past several million years.

Erik's essay made a big impact on me when I first read it and still informs a lot of my thinking. I love how it takes the phenomenological content of dreams seriously, which is where I've always found other theories of dreams sorely lacking. Whenever I'm in a rut and not enjoying the stuff I usually do, I remember I probably just need to go out and find some new input data!

This is honestly a fascinating theory. It could easily explain my personal craving for the odd and weird, and much more.

There’s another curious concept which I think is closely related to this overfitting theory. It’s called ‘defamiliarization’, a literary technique documented by Viktor Shklovsky at the beginning of the 20th century. The idea is to deliberately write prose in a way that feels ‘weird’ / ‘strange’, even otherworldly, to the reader: for example, by using unusual turns of phrase, words, or rhythms, or an unusual point of view (see Tolstoy’s Kholstomer, a story told from the perspective of a horse, pondering topics such as private property, etc.), along with other devices; it can be anything, not just words. The idea behind it is that reading, like everything else, becomes habitual: we stop really paying attention to and absorbing the text, and it becomes too easy, almost automatic. That might seem like a good thing, but from the perspective of ‘living’ and ‘experiencing’, it’s not. Here’s a piece from Tolstoy’s diary:

> I was cleaning and, meandering about, approached the divan and couldn't remember whether or not I had dusted it. Since these movements are habitual and unconscious I could not remember and felt that it was impossible to remember - so that if I had dusted it and forgot - that is, had acted unconsciously, then it was the same as if I had not. If some conscious person had been watching, then the fact could be established. If, however, no one was looking, or looking on unconsciously, if the whole complex lives of many people go on unconsciously, then such lives are as if they had never been.

This applies well to reading. How much do we remember from the books we read? What stands out the most? For me, it’s the style of the prose and any unusual, unique details, rather than the plot.

Shklovsky suggested "enstranging" / defamiliarising objects by complicating the way they are described. Shklovsky wrote that you cannot truly experience something without sufficient intellectual effort. But as a writer, you can make objects unfamiliar to the reader, "defamiliarize" them, and so provide the reader with a fresh view, free from automatism.

I wrote an essay about it some time ago, but since then I have accumulated more thoughts about the topic, so I unpublished it to rework later. It seems now is the right time. I can incorporate the overfitting theory as well (plus exploration/exploitation and such, btw). So, thank you for writing this, Étienne. Both you and Erik have a special power to wake up (great) ideas in my head.

P.S. I can email you my old now-draft if you’re interested.